AI - the unreliable narrator

Introduction

ChatGPT has been around for only a few weeks, yet it has gone viral and led to a lot of claims about what it can do now and what it could potentially do in the near future. My recent posts have looked at how we can use AI tools in education - for teaching and learning as well as for reducing workload for educators. In this week's post, I am going to look at the reliability of AI and show that we can leverage its flaws to teach learners how to learn.

Unreliable Narrators

I like to describe AI tools, such as ChatGPT, as being unreliable narrators. In fiction, an unreliable narrator is an untrustworthy storyteller. The story is typically told in first person, and the fact that the narrator is unreliable adds hugely to the story and the reader's realisation of what is happening. Key to this is that the reader is not told which parts are unreliable. They need to work it out and then use that to enrich the experience of the story.

The content produced by AI tools is similar to a novel's unreliable narrator, and, as with the reader of a novel, you need to have experience to determine whether what is being said is true or not; otherwise, you will believe everything said by the narrator.

With ChatGPT (and other AI tools) you cannot be completely sure whether the generated content is true or not. This is because the AI is not actually intelligent - it is effectively a huge algorithm that predicts the most likely word to come next given an area of focus. Note - it's better, I think, to refer to AI as being augmented intelligence instead. It's a writing app rather than a thinking one. But it will write its response in a very authoritative way, which can lead the user to believe it.

If you are an expert in the area of the generated content, then checking and correcting is relatively quick. If you are not an expert, you will not know whether what has been generated is correct or not. Given this is correct, how can we leverage this in the education space?

Scenarios in Education

1) Double-checking challenges

When it comes to calculating, double-checking is a must, especially when working with AI tools like ChatGPT. Encourage your students to put their number-crunching skills to the test by double-checking their calculations. For example, if they multiplied two positive integers that are both greater than 1, the result should be larger than either of them. Though ChatGPT may produce a seemingly correct answer, it's not always accurate with numbers. By double-checking, students can not only identify errors but also learn to spot when ChatGPT is being unreliable. It's an important way to sharpen critical thinking skills and gain a deeper understanding of the material.
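The kind of double-check described above can be sketched in a few lines of Python. This is only an illustration: the function name `plausibility_checks` and the example numbers are my own assumptions, not something taken from ChatGPT or from an exam paper. It runs the quick checks a student could do by hand (size, number of digits, last digit) before comparing against the exact product.

```python
def plausibility_checks(a: int, b: int, claimed: int) -> dict:
    """Quick checks on a claimed value for a * b (hypothetical helper).

    Assumes both factors are integers greater than 1.
    """
    if a <= 1 or b <= 1:
        raise ValueError("this sketch assumes both factors are greater than 1")
    total_digits = len(str(a)) + len(str(b))
    return {
        # The product of two integers greater than 1 exceeds both factors.
        "larger_than_both_factors": claimed > max(a, b),
        # The product has either (digits of a + digits of b) digits or one fewer.
        "digit_count_plausible": len(str(claimed)) in (total_digits - 1, total_digits),
        # The last digit depends only on the last digits of the factors.
        "last_digit_matches": claimed % 10 == ((a % 10) * (b % 10)) % 10,
        # The full recalculation, which is the final word.
        "exact_match": claimed == a * b,
    }


# Illustrative values only: check a claimed answer for 123 x 456.
for name, passed in plausibility_checks(123, 456, 56085).items():
    print(name, passed)
```

The first three checks can pass even when the answer is wrong, which is a useful discussion point in itself: a plausible-looking number is not the same as a correct one.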

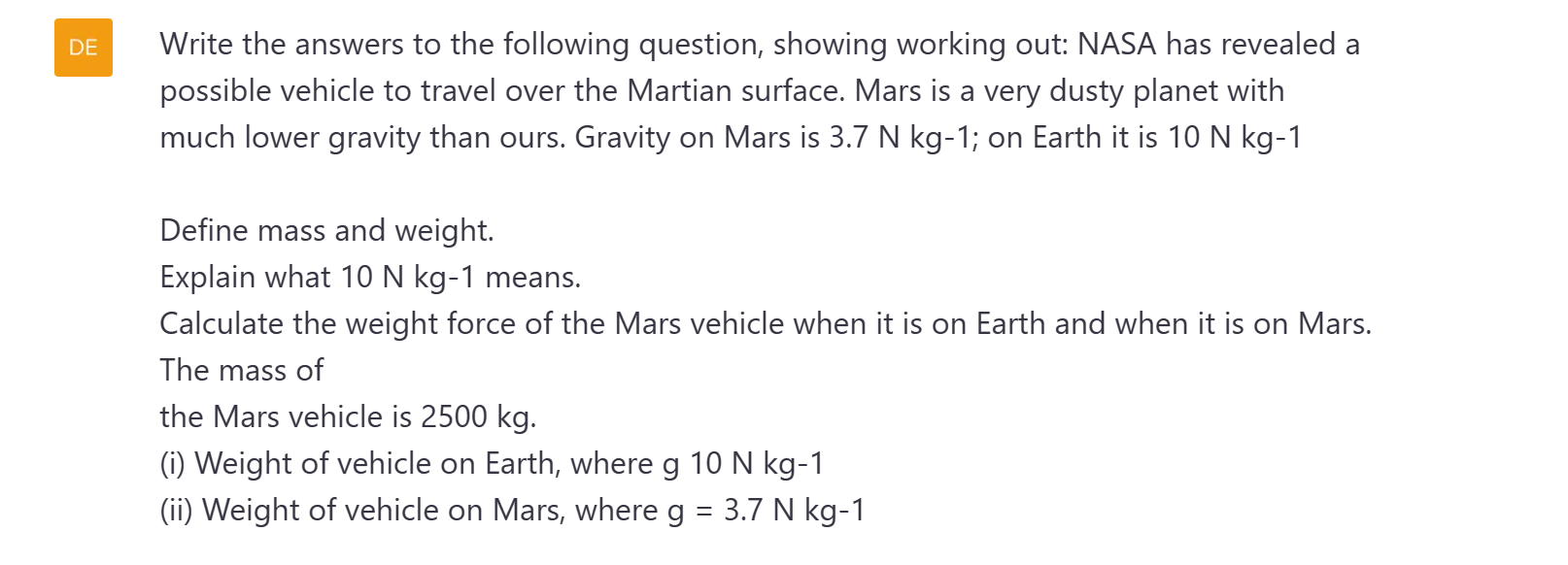

An example of how ChatGPT answers questions is given below:

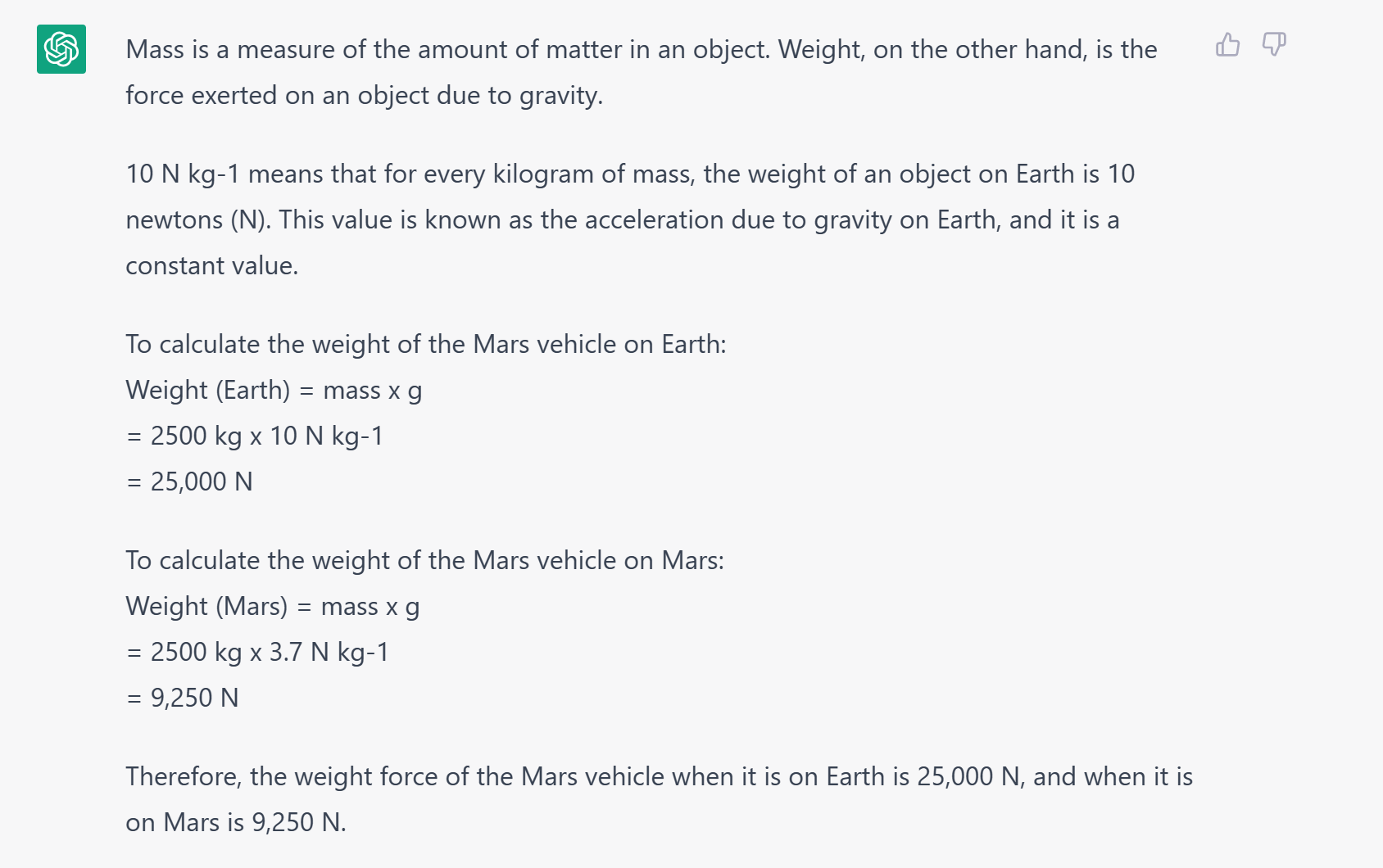

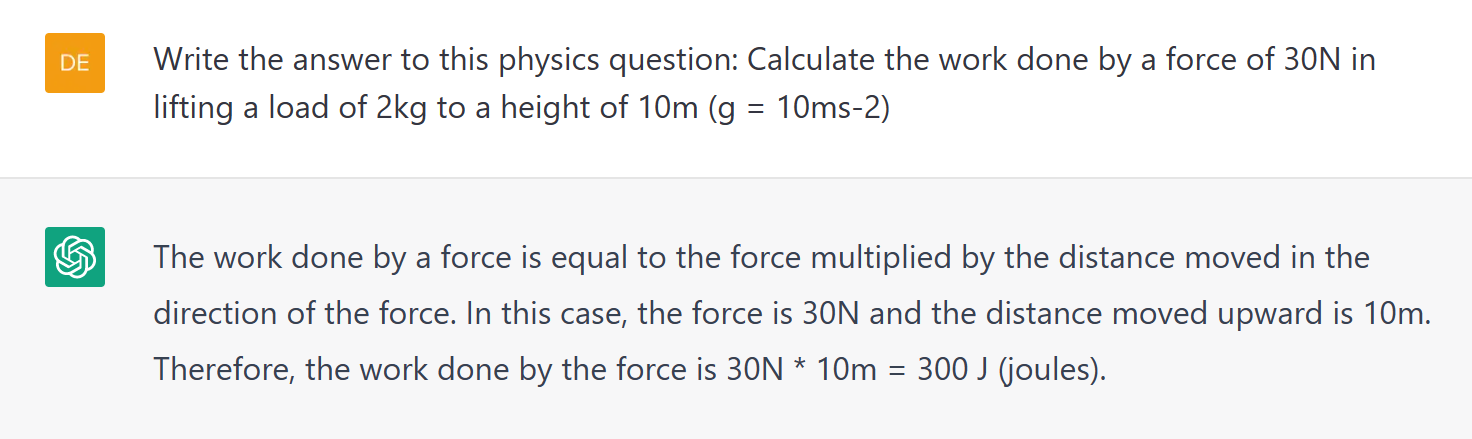

The above example, from a real NCEA exam paper from 2020, is correct. But there have been plenty of times when, for no obvious reason, ChatGPT has miscalculated. In my blog on AI Text, I mentioned this question, which appears on a number of revision sites:

However, I have also been given other answers, including one that incorrectly calculates that the work is going to be 200 J (it has got confused by the mass being 2 kg):

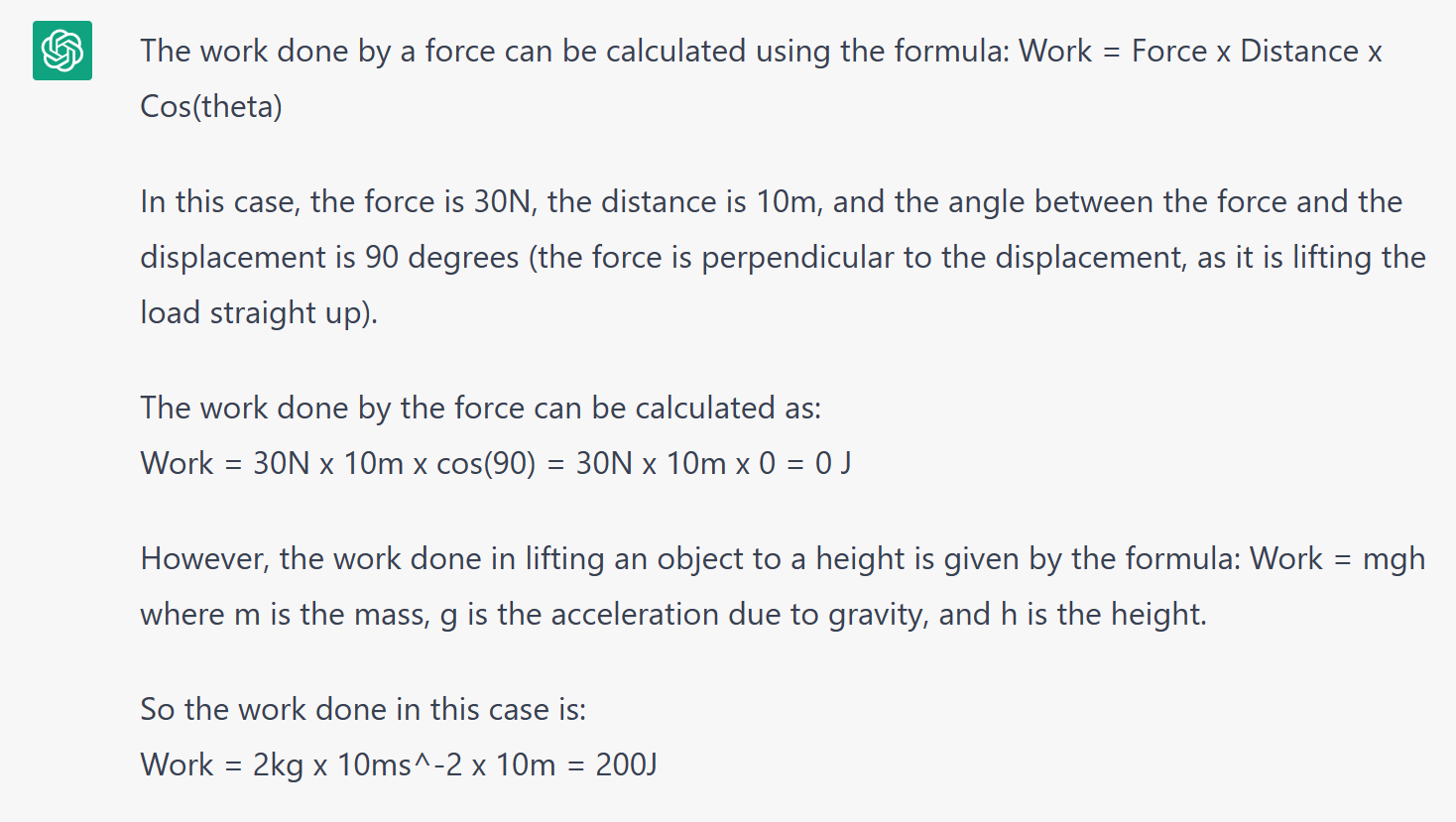

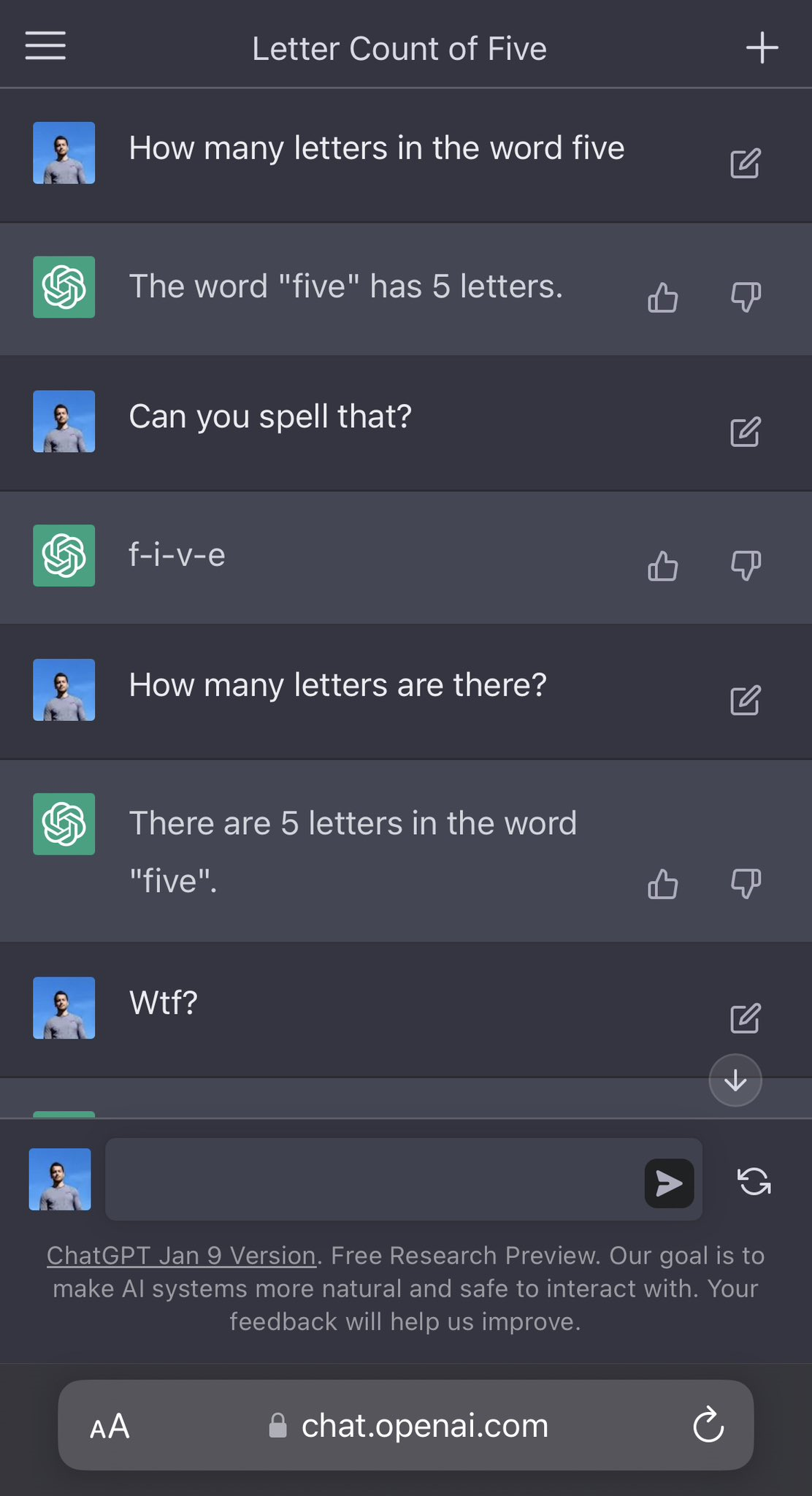

Recently I became aware that ChatGPT seemed to have a problem with counting. This is another example where ChatGPT insists that it is correct when it is not (from Kyriakos Eleftheriou) - it keeps insisting that there are five letters in the word five. Note - if you try this on ChatGPT you will no longer get the error. It is a public-beta research tool and is still being updated and trained.
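A counting claim like this is easy for students to verify independently. As a minimal sketch (the helper name `check_letter_count` is hypothetical), a few lines of Python settle the question without relying on the AI's own confidence:

```python
def check_letter_count(word: str, claimed: int) -> bool:
    """Return True if the word really contains the claimed number of letters."""
    actual = sum(1 for ch in word if ch.isalpha())
    return actual == claimed


# The claim from the example above: "there are five letters in the word five".
print(check_letter_count("five", 5))
print(check_letter_count("five", 4))
```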

2) The Truth Behind the Source

Fact-checking is crucial when it comes to information produced by ChatGPT and other AI tools. Although AI may seem like a reliable narrator, its weakness lies in the sources it cites. By carefully examining the sources, you can uncover the truth and separate fact from fiction. This is not just about catching inaccuracies; it's about gaining a deeper understanding of the information and training yourself to be a savvy evaluator of sources.

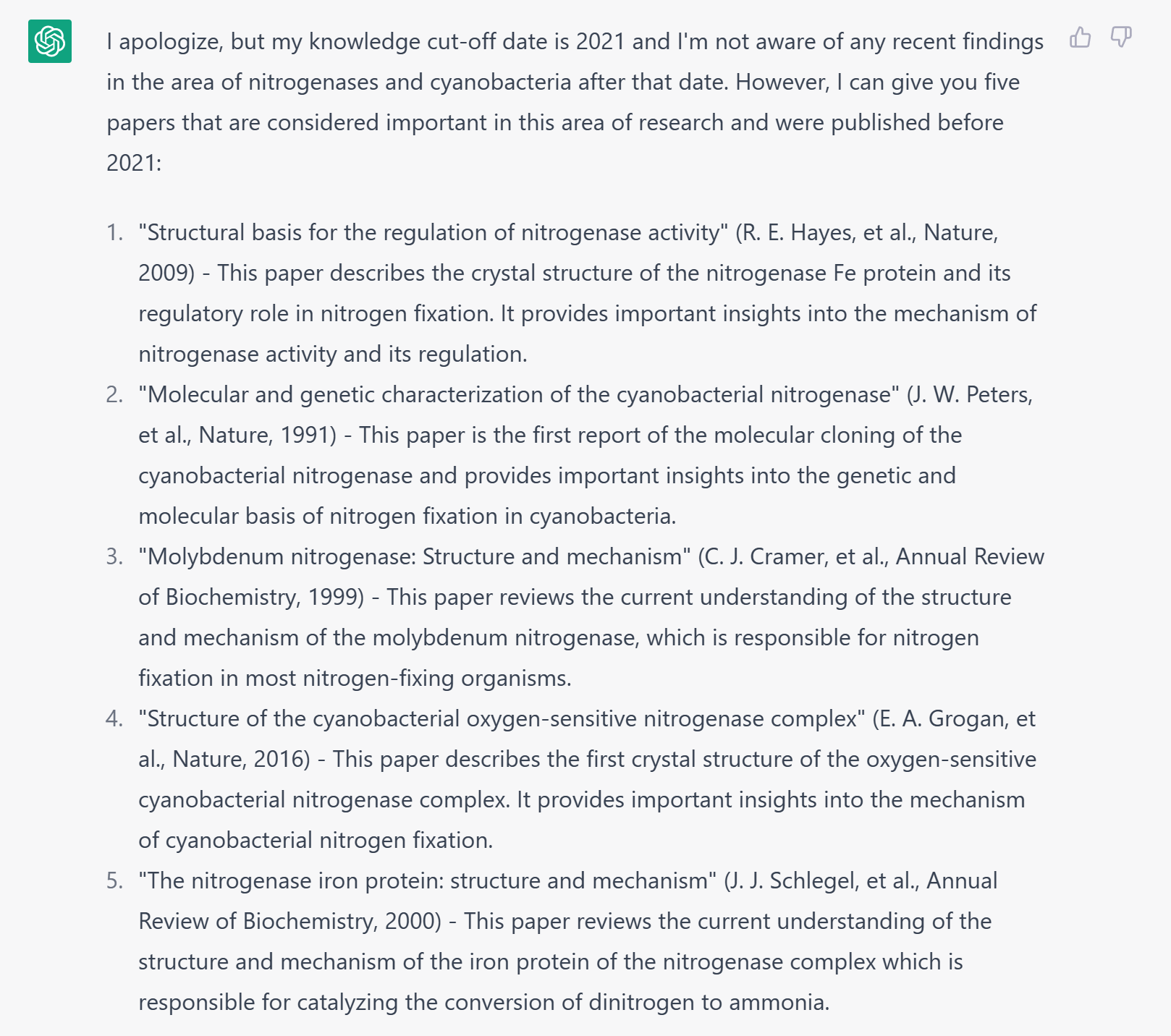

This is a question that I asked ChatGPT:

I am interested in the latest findings about nitrogenases and cyanobacteria. Please give me the five most important papers in this area of research, why they are important and give me the references in APA format.

This is what it generated:

Looks great. It has given me a warning that it cannot provide information beyond 2021 (which is correct) and has then given me a list of research papers. Before I went into teaching, I researched nitrogenases in cyanobacteria - so I recognised the journals. The titles also seemed appropriate. However, I did not recognise any of the author names - which, as a former expert in that area, is a red flag. Not only that but the DOI links all lead to different papers or are broken. Going the extra step and entering the titles into Google Scholar shows that everything here has been made up. Try it out for yourself:

References:

- Hayes, R. E., van der Meer, A. D., & Albers, S. V. (2009). Structural basis for the regulation of nitrogenase activity. Nature, 462(7271), 717–723. https://doi.org/10.1038/nature08564

- Peters, J. W., Chen, J., & Seefeldt, L. C. (1991). Molecular and genetic characterization of the cyanobacterial nitrogenase. Nature, 351(6328), 714–719. https://doi.org/10.1038/351714a0

- Cramer, C. J., & Seefeldt, L. C. (1999). Annual Review of Biochemistry, 68(1), 945–980. https://doi.org/10.1146/annurev.biochem.68.1.945

- Grogan, E. A., et al. (2016). Structure of the cyanobacterial oxygen-sensitive nitrogenase complex. Nature, 538(7624), 216–221. https://doi.org/10.1038/nature19812

- Schlegel, H. G., & Howard, J. B. (2000). The nitrogenase iron protein: structure and mechanism. Annual Review of Biochemistry, 69(1), 971–1004. https://doi.org/10.1146/annurev.biochem.69.1.971

How does this flaw help in education? Well, I would demonstrate this to students and then teach them how to use Google Scholar (or, even better, Connected Papers) to track the authenticity of the links. This is what researchers do, and in a world where we are going to have AI-generated content, possibly to promote pseudoscience and conspiracy theories, this is needed too!
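For classes that are comfortable with a little code, part of this check can be automated. The sketch below is an assumption on my part rather than an established workflow: it looks up the title registered for a DOI and compares it with the title the AI gave. The function names are hypothetical, and `crossref_title` assumes that the public Crossref REST API at `https://api.crossref.org/works/<doi>` returns JSON with the registered titles under `message.title`. The 0.8 similarity threshold is an arbitrary starting point.

```python
import json
import urllib.parse
import urllib.request
from difflib import SequenceMatcher
from typing import Callable, Optional


def crossref_title(doi: str) -> Optional[str]:
    """Return the title registered for a DOI, or None if the lookup fails.

    Assumption: the Crossref REST API response has a message.title list.
    """
    url = "https://api.crossref.org/works/" + urllib.parse.quote(doi)
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            titles = json.load(response)["message"].get("title", [])
    except (OSError, ValueError, KeyError):
        # Network errors, unknown DOIs and unexpected JSON all count as "not found".
        return None
    return titles[0] if titles else None


def title_matches(
    claimed_title: str,
    doi: str,
    lookup: Callable[[str], Optional[str]] = crossref_title,
    threshold: float = 0.8,
) -> bool:
    """Check whether a cited title resembles the title registered for its DOI."""
    registered = lookup(doi)
    if registered is None:
        return False
    similarity = SequenceMatcher(
        None, claimed_title.lower(), registered.lower()
    ).ratio()
    return similarity >= threshold
```

Calling `title_matches(title, doi)` for each entry in the list above needs an internet connection. A `False` result only means the reference needs a closer look by hand, and a `True` result still leaves the authors and the content to be checked.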

Incidentally, I have also found the same problem with referencing in You.com's YouChat as well as Luna in Moonbeam (both are otherwise excellent AI tools).

3) Uncovering the Cheater's Game

As an educator, you can use this weakness of ChatGPT to catch students who might be trying to cheat by using AI to generate their work. One of the telltale signs of AI-generated content is the use of fake references. By requiring students to include references in their work, you can check that they are truly engaging with the content they are learning. This is not just about catching cheaters; it's about fostering a deeper understanding of the material and encouraging academic integrity.

4) Fact-Checking Frenzy

You can also turn your classroom into a battleground of truth and accuracy by having students use ChatGPT to generate explanations on a topic (such as mitosis) and then challenging them to fact-check each sentence. They can start by rating the explanation's accuracy based on their own understanding and then verify their findings with non-AI sources. Not only will this exercise improve their research skills, but it will also make them more critical consumers of information in an age of misinformation.

Incidentally, information like this would previously have come from a textbook; being able to generate it on demand is one of the potential benefits of ChatGPT.

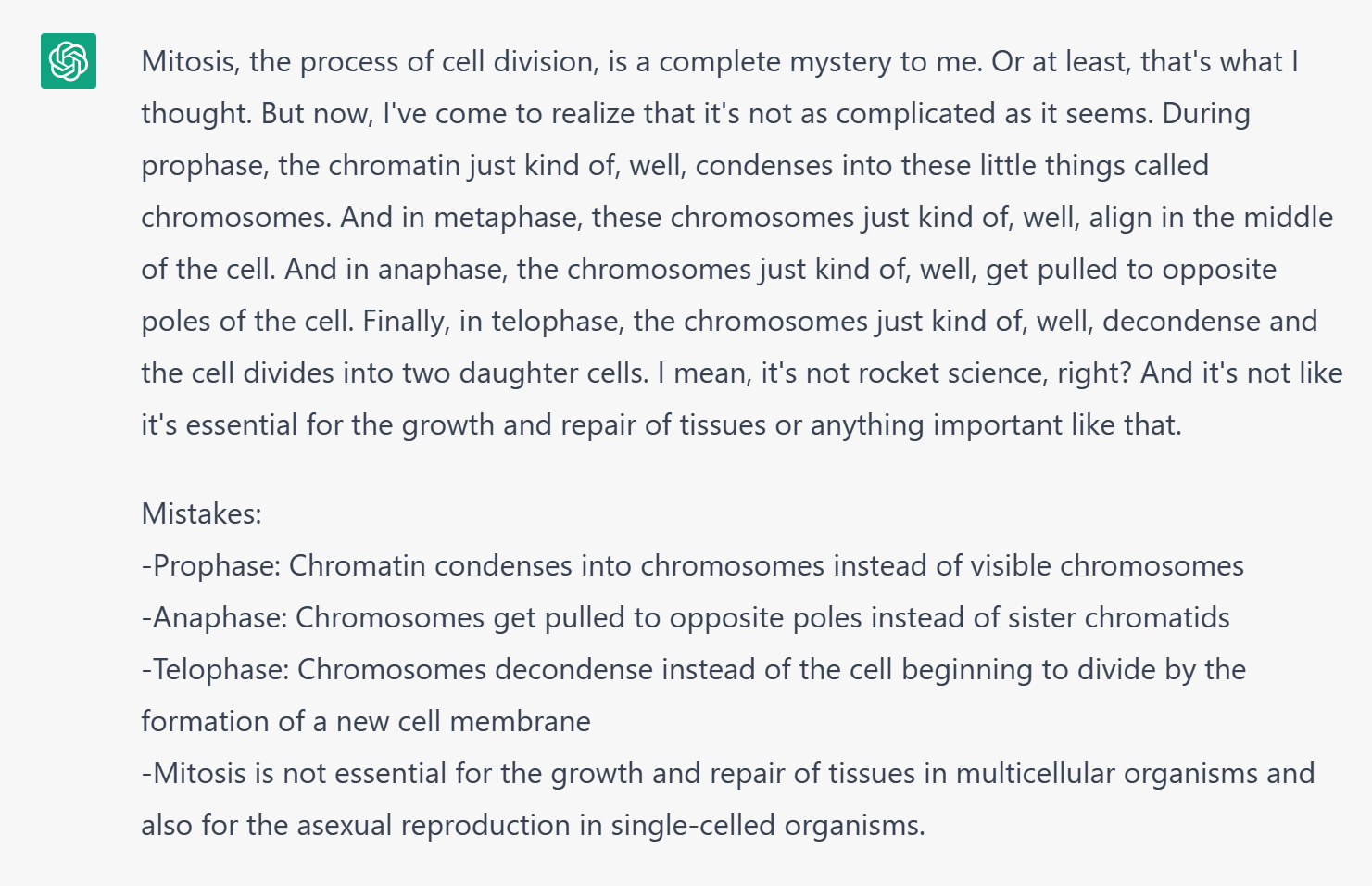

5) Unleash your inner detective

You can create explanations and then ask ChatGPT to rewrite them introducing deliberate errors. This means that the students must check what has been written and identify the deliberate errors. It's a bit like the 'find five differences between pictures' puzzles.

Here I asked ChatGPT for information on the process of mitosis. I then checked that it was correct.

I then asked ChatGPT to write this as an unreliable narrator along with some deliberate mistakes that the students will have to figure out:

Getting ChatGPT to produce text in a particular style is a great way to make it more engaging.

You can also feed ChatGPT's responses straight back to itself, or to another AI such as Luna, and ask it to critique the answer (although sometimes it will get the critique wrong, too). Students can discuss the effectiveness of this technique.

6) Mastering the Art of Communication

Unleashing the full potential of AI text and art generators is not just about the technology, but also about the ability to communicate effectively with it. By honing their questioning skills, students can not only extract more specific and accurate information from the AI, but also learn to provide more context and challenge the AI's responses. This is a valuable skill that will serve them well in all areas of life and help them navigate an increasingly technology-driven world.

Conclusion

I've gone through a few ways that ChatGPT demonstrates its flaws - its unreliability. But we can leverage that to show learners how to check authenticity correctly, which is a skill we need regardless of whether an AI has created a piece of work. We can use it to generate intentional errors that students then have to find and correct. We can also use it to show evidence that a student may have used AI to write a piece of work (although milestones would have shown that at a much earlier stage).

There are more flaws with the new generation of AI tools that are available today that I haven't mentioned. But on the whole, most of the tools are creating educationally useful content. Even flawed content can be used deliberately to train students how to check for authenticity and verifiable information.

If you have any ideas yourself, do let me know on Twitter (@Delta_Cephei).